System Design Interview Concepts – Database Sharding

What is Sharding or Data Partitioning?

Sharding (also known as Data Partitioning) is the process of splitting a large dataset into many small partitions which are placed on different machines. Each partition is known as a "shard".

Each shard has the same database schema as the original database. Most data is distributed such that each row appears in exactly one shard. The combined data from all shards is the same as the data from the original database.

The two illustrations below shows a system with no sharding and another with a simple sharding scheme.

Note that the sharded architecture is transparent to the client application. The client application keeps on talking to the database shards(partitions) as if it was talking to a single database.

What scalability problems are solved by Sharding?

As more users are onboarded on your system, you'll experience performance degradation with a single database server architecture . Your read queries and updates will start become slower and your network bandwidth may be start to saturate . You'll probably start running out of disk space on your database server at some point.

Sharding helps to fix all the above issues by distributing data across a cluster of machines. In theory, you can have a huge number of shards thereby providing virtually unlimited horizontal scaling for your database.

Is each shard located on a different machine ?

Each shard may be located on the same machine (coresident) or on different machines(remote).

The motivation for co-resident partitioning is to reduce the size of individual indexes and reduce the amount of I/O (input/output) that is needed to update records.

The motivation for remote partitioning is to increase the bandwidth of access to data by having more RAM in which to store data, by avoiding disk access, or by having more network interfaces and disk I/O channels available.

What are some common Sharding or Data Partitioning Schemes ?

There are four common sharding strategies:

1. Horizontal or Range Based Sharding

In this case, the data is split based on the value ranges that are inherent in each entity. For example, the if you store the contact info for your online customers, you might choose to store the info for customers whose last name starts with A-H on one shard, while storing the rest on another shard.

The disadvantage of this scheme is that the last names of the customers may not be evenly distributed. You might have a lot more customers whose names fall in the range of A-H than customers whose last name falls in the range I-Z. In that case, your first shard will be experiencing a much heavier load than the second shard and can become a system bottleneck.

Nevertheless, the benefit of this approach is that it's the simplest sharding scheme available. Each shard also has the same schema as the original database. Your application layer is relatively simple because in most scenarios, you'll not need to combine data from multiple shards to answer any query.

It works well for relative non static data -- for example to store the contact info for students in a college because the data is unlikely to see huge churn.

2. Vertical Sharding

In this case, different features of an entity will be placed in different shards on different machines. For example, in a LinkedIn like application, an user might have a profile, a list of connection and a set of articles he has authored. In Vertical sharding scheme , we might place the various user profiles on one shard, the connections on a second shard and the articles on a third shard.

The main benefit of this scheme is that you can handle the critical part of your data (for examples User Profiles) differently from the not so critical part of your data (for example, blog posts) and build different replication and consistency models around it.

The two main disadvantages of vertical sharding scheme are as follows:

- Depending on your system, your application layer might need to combine data from multiple shards to answer a query. For example, a profile view request will need to combine data from the User Profile, Connections and Articles shard. This increases the development and operational complexity of the system.

- If your Site/system experiences additional growth then it may be necessary to further shard a feature specific database across multiple servers.

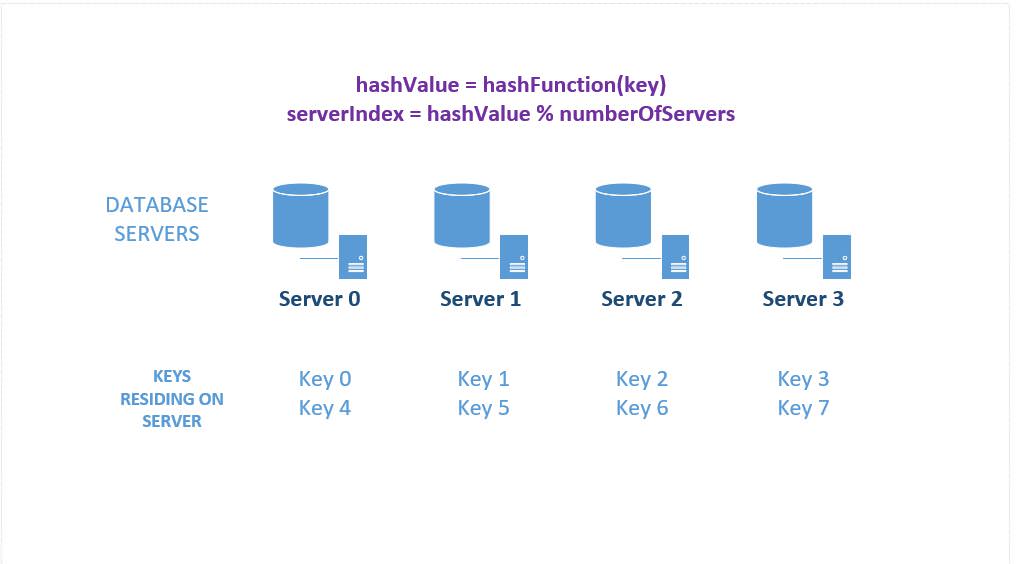

3. Key or hash based sharding

In this case, an entity has a value ( Eg. IP address of a client application) which can be used as an input to a hash function and a resultant hash value generated. This hash value determines which database server(shard) to use.

As a simple example, imagine you have 4 database servers and each request contained an application id which was incremented by 1 every time a new application is registered.

In this case, you can simply perform a modulo operation on the application id with the number 4 and take the remainder to determine which server the application data should be placed on.

The main drawback of this method is that elastic load balancing ( dynamically adding/removing database servers) becomes very difficult and expensive.

For example, if we wanted to add 6 more servers, majority of the keys would need to be remapped and migrated to new servers. Also, the hash function will need to be changed from modulo 4 to modulo 10.

While the migration of data is in effect , neither the new nor the old hash function is fully valid. So in effect, a large number of the requests cannot be serviced and you'll incur a downtime till the migration completes.

This problem is easily solved by Consistent hashing. Please read the Consistent Hashing article if you're not familiar with the concept since there's a good high probability you'll need to use it in one of your system design interviews.

4. Directory based sharding

Directory based shard partitioning involves placing a lookup service in front of the sharded databases. The lookup service knows the current partitioning scheme and keeps a map of each entity and which database shard it is stored on. The lookup service is usually implemented as a webservice.

The client application first queries the lookup service to figure out the shard (database partition) on which the entity resides/should be placed. Then it queries / updates the shard returned by the lookup service.

What does this loose coupling buy us ?

It enables us to solve the elastic scaling problem described in the previous section without using Consistent Hashing.

Here's how: In the previous example, we had 4 database servers and a hash function that performed a modulo 4 operation on the application ids. Now, if we wanted to add 6 more database servers without incurring any downtime, we'll need to do the following steps:

- Keep the modulo 4 hash function in the lookup service .

- Determine the data placement based on the new hash function - modulo 10.

- Write a script to copy all the data based on #2 into the six new shards and possibly on the 4 existing shards. Note that it does not delete any existing data on the 4 existing shards.

- Once the copy is complete, change the hash function to modulo 10 in the lookup service

- Run a cleanup script to purge unnecessary data from 4 existing shards based on step#2. The reason being that the purged data is now existing on other shards.

There are two practical considerations which needs to be solved on a per system basis:

- While the migration is happening, the users might still be updating their data. Options include putting the system in read-only mode or placing new data in a separate server that is placed into correct shards once migration is done.

- The copy and cleanup scripts might have an effect on system performance during the migration. It can be circumvented by using system cloning and elastic load balancing - but both are expensive.

Directory Based Sharding ( Courtsey: MSDN)

What are the common problems with Sharding?

The above sections might make it sound like Sharding is the ultimate Silver Bullet to solve all your scaling woes. However, this is not the case and there are various issues to be considered before choosing a sharding based solution.

Database Joins become more expensive and not feasible in certain cases

When all the data is located in a single database, joins can be performed easily. Now, when you shard the database, joins have to be performed across multiple networked servers which can introduce additional latency for your service.

Additionally, the application layer also needs additional level of asynchronous code and expception handling which increases the development and maintenance cost.

In certain situations, cross machine joins may not be an option if you need to maintain high availability SLA for your service.

Then the only option left is to de-normalize your database to avoid cross server joins. While this scheme helps with system availability, you now have to contend with keeping all the data in the different shards consistent. Your application layer logic will probably needs tol change significantly to deal with inconsistent data from different shards.

Please see the post on CAP theorem which explores the trade off between Consistency and Availability.

Sharding can compromise database referential integrity

Most RDBMS do not support foreign keys across databases on different database servers. This means that applications that require referential integrity often have to enforce it in application code and run regular SQL jobs to clean up dangling references once they move to using database shards.

If you're in the NoSQL land, this is less of an issue because you've already taken a hit for referential integrity and consistency in your application layer anyways.

As a mitigation for consistency and referential integrity issues , you should minimize operations that affect data in multiple shards.

If an application must modify data across shards, evaluate whether complete data consistency is actually required. Instead, a common approach in the cloud is to implement eventual consistency. The data in each partition is updated separately, and the application logic must take responsibility for ensuring that the updates all complete successfully, as well as handling the inconsistencies that can arise from querying data while an eventually consistent operation is running.

Database schema changes can become extremely expensive

In some situations as your user base grows, the schema might need to evolve. For example, you might have been storing user picture and user emails in the same shard and now need to put them on different shards. This means that all your data will need to be relocated to new location. This can cause down-times in your system.

A potential solution is to use directory based partitioning or consistent hashing to solve this problem.

When to use the Sharding in a System Design Interview?

Whew ! That was a lengthy article - but there's one last thing you need to understand -

When to use sharding ?

Use this pattern when a data store is likely to need to scale beyond the resources available to a single storage node, or to improve performance by reducing contention in a data store.

For example, if you're designing the next Netflix, you'll need to store and provide low latency reads to a huge number of video files. In this case you might want to shard by the genre of the movies. You'll also want to create replicas of the individual shards to provide high availability.

The primary focus of sharding is to improve the performance and scalability of a system, but as a by-product it can also improve availability due to how the data is divided into separate partitions. A failure in one partition doesn't necessarily prevent an application from accessing data held in other partitions, and an operator can perform maintenance or recovery of one or more partitions without making the entire data for an application inaccessible.